04.June.2022

Ontonics Superstructure #27

For the location of a South American gigahub of our World Wide Hover Association (WWHA) Transcontinental Network respectively Silk Skyway with its hubs, superhubs, megahubs, and gigahubs we are looking at the city and urban area around

06.June.2022

08:00 and 23:22 UTC+2

Short summary of clarification

We have finalized the quoting of other works and are now finalizing the initial commenting of them in the Clarification of the 8th of May 2022.

Still missing are some more comments, a better ordering of the comments, better explanations of our works, better corrections of others' nonsense in the comments, and an epilog.

But several most important results can already be recognized:

- Agent-Based System (ABS), including

- Intelligent Agent System (IAS),

- Cognitive Agent System (CAS), and

- Multi-Agent System (MAS), including

- Holonic Multi-Agent System (HMAS) or simply Holonic Agent System (HAS),

- Model-Based Autonomous System (MBAS) or Immobile Robotic System (ImRS or Immobot),

- Cognitive Architecture or Cognitive System,

- Cybernetical Intelligence (CI),

- evolvable architecture,

- Semantic (World Wide) Web (SWWW), including Linked Data (LD),

- fusion of realities,

- etc.

07.June.2022

12:11 and 14:55 UTC+2

Short summary of clarification

More most important results, that can already be recognized:

And we also shared our impression about the highly suspicious coincidence with The Proposal. We said to mark it, but now we will simply substitute it with the original works.

In relation to ontology in general and ontology as used in the fields of Artificial Intelligence (AI), Knowledge Management (KM), Natural Language Processing (NLP), and Semantic (World Wide) Web (SWWW) there is no direct reference by the TUNES OS and our Evoos. But while the TUNES OS focuses on the field of operating system (os), our Evoos adds the fields of

among many other fields.

Furthermore, our Evoos is the foundation of the fields of

This leads us back to the beginning respectively the latest clarifications about the fields of

Specifically, what has been added by our Evoos is what turns the SWWW into the DSW and the Web x.0, and eventually the failure into the success and the revolution, as usual when we take the helm.

And there will be a Universal Ontologic (UO) or Global Ontologic (GO), including a Universal Ontology (UOL) or Global Ontology (GOL), which is evolvable, dynamic, and incorporates parts of all other ontologies, DataBases (DBs), Knowledge Bases (KBs), and so on, which also includes digital maps, digital globes, and other multimodal things, and is common to all.

If and only if (Iff.) we take over the company Alphabet (Google) through our Society for Ontological Performance and Reproduction (SOPR) for a truly reasonable price (note damages, fees, royalties, and no payment for our rights and properties), then a part of its Graph-Based Knowledge Base (GBKB) or Knowledge Graph (KG) would become the (initial or foundational) GOL. Alternatively, we will build it up from a void or a blank.

For sure, one can discuss every detail and potential mistake of ours in relation to these fields, but at the end of the day the overall situation will not change.

As in the case of the novel titled "The Old Man and the Sea" and written by Ernest Hemingway in the year 1951, the sharks gnawed off the marlin fish completely, but the remaining (endo)skeleton was secured.

But in the follow-up part written by C.S., the sharks need the skeleton to survive somehow.

And this is our core and we will not discuss the matter at length with politicians, scientists, managers, and other persons:

All or nothing at all. Sign, pay, comply.

See the section Further steps [Inviting letter] of the issue SOPR #33m of the 2nd of May 2022 for more details.

09.June.2022

19:10 and 21:00 UTC+2

Ontonics Further steps

We have once again adjusted our overall business plan, specifically the investment and development plans and the sequence of steps, to adapt to the latest decisions of governments and industries.

But this adjustment respectively adaptation does not affect the volumes of investments, the sizes of locations, and the counts of jobs.

11.June.2022

13:36 and 16:10 UTC+2

Short summary of clarification

Now we are into the subject and get it all together again. But it is still a little different. Honestly, we wondered a little why we were working on the Clarification of the 8th of May 2022 and discussing other works and their relations to our works, although we had already referenced the Clarification of the 28th of April 2016. :D

So we have to correct a lot. At this point we already note that we have moved some content of the Clarification of the 8th of May 2022 to the other Clarification of the 28th of April 2016, because it is also a work in progress and belongs to the earlier clarification.

Indeed, we have studied the subfields of proemiality and polycontexturality and PolyContextural Logic (PCL) of the field of Cybernetics, but we also studied the fields of Chaos theory or chaos and order, fractality and {?}holoiconicity, holonicity, holonic, holistic, and holologic, when working on our Evolutionary operating system (Evoos) described in The Proposal and The Prototype.

We already began the discussion in the Clarification of the 28th of April 2016 about the various spectra, such as the

which others and C.S. showed to be intertwined with each other seamlessly.

This also points to the three-layer architecture or hybrid architecture in the field of Agent-Based System (ABS) consisting of

See for example the following works:

But our Evoos has a dynamic, reflective, metamorphic, flexible meta-layer structure or architecture, which can have one to infinitely many layers as required (at runtime). This is also the reason why we describe the Ontologic System Architecture (OSA) as a special abstraction of a layered system architecture, which requires the selection of a specific point in space and time and also a specific view to see its structure, and as being liquid, which also relates it to the atom model (e.g. position of an electron) and the CHemical Abstract Machine (CHAM) for example. See also the fields of Holonic Manufacturing System (HMS) and Holonic Multi-Agent System (HMAS) or Holonic Agent System (HAS). What was missing in 1999 was a Distributed HAS, which is the integration of the fields of Distributed operating system (Dos) and HAS, and which is given with our Evoos.

We were also engaged with the relations of the fields of semantics to syntax to semiotics to kenogrammatics, which also led us to the fields of proemiality and polycontexturality. But the deeper we went to find a common, ultimate, and universal ground, the more esoteric and irrational the matter became. To show the latter we decided to quote works of the related fields broadly and extensively, so that everybody can get an impression of what we mean when talking about too much talk and blah blah blah, too few solutions, no risks taken to make decisions, and also subjectivity, esoterics, and irrationality.

Some say the universe is a very big number or so, others say mathematics is the language of the universe, and others say the universe is a computation. At least, one can observe a development and transformation.

The author of "Derrida's Machines" discusses the characteristics and the very nature of numbers.

But the syntax, signs, and semiotics of number systems and modern mathematics are a fractal. In fact, it does not matter which signs one uses for counting and calculating, the results are always the same, but only the length of the numbers or strings used to describe the numbers and the results of computation become shorter, if one has more signs available to express a result.

This led to our conclusion that all must be one, including the fields of logics, mathematics, cybernetics and their classical logics, non-classical logics, Fuzzy Logic (FL), Arrow Logic (AL), PolyContextural Logic (PCL), holologic, and so on, and also their objectivity and subjectivity and proemiality. And the only truly rational concept, that meets all aspects and requirements, is not the proemiality, polycontexturality, Arrow System, or similar ... things, ideas, concepts, approaches, theories, and so on, but the relationships are a fractal moving, operating, or executing in its own fractal structure respectively in itself as translations or transformations or morphisms of itself.

Correspondingly, our Zero Ontology or Ontological Zero does not represent an empty set or zero, like in logics, mathematics, or other fields, as also discussed by the author of "Derrida's Machines", but a point or location in the fractal, which can also be interpreted and written as a string of one or more zeros 0 or 00 or 000 or 0...0. It is not a beginning and it is not an ending, it has no beginning and it has no ending, exactly as required by theologies, philosophies, mathematics, cybernetics, and so on. The latter also solves the problems with 0 and infinity, as discussed in cybernetics.

We also have the duality represented for example with 0 and 1, and we have the interval between 0 and 1, or the range from 0 to 1, or the spectrum or continuum of 0 and 1.

Some examples

count of signs 2; length 1; decimal (2^0)

0 (0)

1 (1) (1 = 2^1 - 1)

count of signs 2; length 2; decimal (2^1 2^0)

0 0 (0)

0 1 (1)

1 0 (2)

1 1 (3) (3 = 2^2 - 1)

count of signs 2; length 3; decimal (2^2 2^1 2^0)

0 00 (0)

0 01 (1)

0 10 (2)

0 11 (3)

1 00 (4)

1 01 (5)

1 10 (6)

1 11 (7) (7 = 2^3 - 1)

count of signs 3; length 1; decimal (3^0)

0 (0)

1 (1)

2 (2 = 3^1 - 1)

count of signs 3; length 2; decimal (3^1 3^0)

0 0 (0)

0 1 (1)

0 2 (2)

1 0 (3)

1 1 (4)

1 2 (5)

2 0 (6)

2 1 (7)

2 2 (8 = 3^2 - 1)

count of signs 3; length 3; decimal (3^2 3^1 3^0)

0 00 (0)

0 01 (1)

0 02 (2)

0 10 (3)

0 11 (4)

0 12 (5)

0 20 (6)

0 21 (7)

0 22 (8)

1 00 (9)

1 01 (10)

1 02 (11)

1 10 (12)

1 11 (13)

1 12 (14)

1 20 (15)

1 21 (16)

1 22 (17)

2 00 (18)

2 01 (19)

2 02 (20)

2 10 (21)

2 11 (22)

2 12 (23)

2 20 (24)

2 21 (25)

2 22 (26 = 3^3 - 1)

count of signs 10; length 1; decimal (10^0)

0

1

2

3

4

5

6

7

8

9 (9 = 10^1 - 1)

count of signs 10; length 2; decimal (10^1 10^0)

0 0

0 1

0 2

0 3

0 4

0 5

0 6

0 7

0 8

0 9

...

9 0

9 1

9 2

9 3

9 4

9 5

9 6

9 7

9 8

9 9 (99 = 10^2 - 1)

count of signs 10; length 3; decimal (10^2 10^1 10^0)

0 00

0 01

0 02

0 03

0 04

0 05

0 06

0 07

0 08

0 09

...

0 90

0 91

0 92

0 93

0 94

0 95

0 96

0 97

0 98

0 99

1 00

...

1 99

2 00

...

2 99

3 00

...

3 99

4 00

...

4 99

5 00

...

5 99

6 00

...

6 99

7 00

...

7 99

8 00

...

8 99

9 00

...

9 90

9 91

9 92

9 93

9 94

9 95

9 96

9 97

9 98

9 99 (999 = 10^3 - 1)

count of signs 16; length n; decimal (16^(n-1) ... 16^0)

max 16^n - 1

count of signs i; length n; decimal (i^(n-1) ... i^0)

max i^n - 1

And so on.
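The tables above can be reproduced mechanically. The following is a minimal Python sketch under stated assumptions, not a part of the original clarification: the function names `to_decimal`, `enumerate_strings`, and `to_signs` are hypothetical and were chosen only for illustration. It lists all strings of length n over i signs with their decimal values, checks that the largest value is i^n - 1, and shows that the same number needs fewer positions when more signs are available.

```python
from itertools import product


def to_decimal(digits, base):
    """Interpret a sequence of digits (most significant first) in the given base."""
    value = 0
    for d in digits:
        value = value * base + d
    return value


def enumerate_strings(base, length):
    """Return all (digits, decimal value) pairs for strings of `length` signs."""
    return [(digits, to_decimal(digits, base))
            for digits in product(range(base), repeat=length)]


def to_signs(value, base):
    """Write a non-negative integer with `base` signs (most significant first)."""
    digits = [value % base]
    value //= base
    while value:
        digits.append(value % base)
        value //= base
    return digits[::-1]


for base, length in [(2, 3), (3, 2)]:
    table = enumerate_strings(base, length)
    # The last string consists only of the highest sign: max i^n - 1.
    assert table[-1][1] == base ** length - 1
    for digits, value in table:
        print(" ".join(map(str, digits)), f"({value})")

# The same number written with 2, 10, and 16 signs: only the length changes.
for base in (2, 10, 16):
    print(base, to_signs(999, base), len(to_signs(999, base)))
```

The value of a string does not depend on which symbols are used for the signs, only on their count and positions, which is the point made in the text before the examples.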

Computing with Words (CW or CwW)

= 0

a = 1

b = 2

c = 3

...

z = ...

Unicode Standard

Unicode Transformation Format (UTF), extended American Standard Code for Information Interchange (ASCII), variable-width encoding

UTF-8
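As a small illustration of the variable-width encoding mentioned above, the following Python sketch prints the UTF-8 bytes of a few characters. The helper name `utf8_bytes` and the sample characters are assumptions made for this example only; the encoding itself is done by Python's built-in `str.encode`.

```python
def utf8_bytes(character):
    """Return the UTF-8 encoding of a single character as a list of byte values."""
    return list(character.encode("utf-8"))


# a, z, a-umlaut (U+00E4), euro sign (U+20AC), musical G clef (U+1D11E)
samples = ["a", "z", "\u00e4", "\u20ac", "\U0001d11e"]

for ch in samples:
    encoded = utf8_bytes(ch)
    bits = " ".join(f"{b:08b}" for b in encoded)
    print(f"U+{ord(ch):04X} uses {len(encoded)} byte(s): {bits}")

# ASCII characters keep their one-byte ASCII code in UTF-8.
assert "a".encode("utf-8") == "a".encode("ascii")
```

Characters with small code points get short strings and characters with large code points get longer strings, so the length of the encoding depends on the position of the character in the code space.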

See also for example

Abstract Machines (AMs)

Virtual Machines (VMs)

Abstract Virtual Machines (AVMs)

Operating systems (oss)

And so on.

But the other question, besides the question of who created the fractal, mathematics and logics, and life, is where the dynamics come from. Here we have space and time and ontology with its order of what existed before and what came after (see also arrow of time, entropy, thermodynamics, etc.).

Furthermore, if one assumes a kind of complexity or an interplay of chaos and order, which is related to fractal structure or fractality, and self-similarity, as shown in for example the book titled

which leads to self-referentiality and self-organization, as shown in for example the books titled

and leads further to the contents of for example the books titled

and also the works referenced in the

- Algorithmic/Generative/Evolutionary/Organic ... Art/Science,

- Exotic Operating System,

- Intelligent/Cognitive Agent, and

- Intelligent/Cognitive Interface,

then this observable universe must be a part of at least one of all possible universes, which has a fractal structure. This also explains why the Fibonacci sequence and the Golden Ratio Phi are so interesting, indeed one can find direct connections of them to all these fields, why we have "Just Six Numbers [of] The Deep Forces That Shape the Universe", and why the concept of the multiverse is not so esoteric.

Best of all is that a fractal is absolutely rational.

Subjectivity can be or even is an objective number or rational number.

This all leads us back to the start of the discussion about the various spectra.

In this situation the problem is to get from one location in the fractal to another location in the fractal by finding another (location in the) fractal for the translation, transformation, or morphogenesis. And at this point complexity hammers in, because now we are in the fields of

We are not aware of any other works of art and oeuvre like this, specifically our Evoos and our OS. Indeed, there are a lot of works that discuss parts or excerpts of the big picture and therefore are referenced by us, but nothing has ever existed that integrates all in one, into one absolutely sound, homogeneous, and consistent Ontologics, Theory of Everything (ToE) with a Caliber/Calibre, Reality operating system, fusion of realities or New Reality, Ontoverse, and so on.

13.June.2022

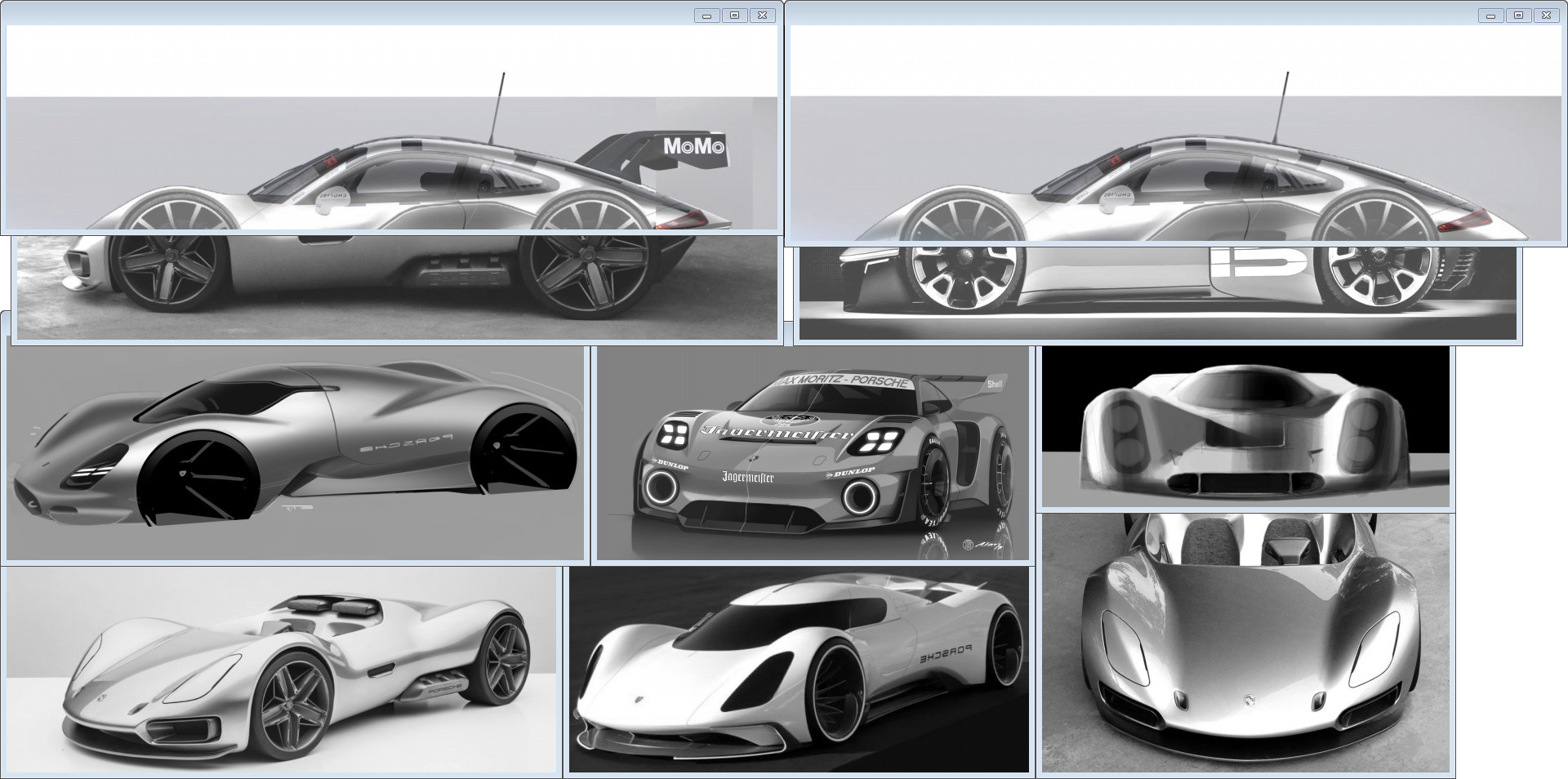

Style of Speed Further steps

As mentioned in earlier Further steps, we have some design directions for our 91x project, as shown in the two collages below.

The first collage shows designs of the front and side sections (from left to right and top to bottom):

1. Porsche, Marco Brunori, 911 Pounds (inspired by SoS 9EE series), 991 GT3 RSR 2017, and Mission R rear wing, and Tom Harezlak, 903

2. Porsche, Marco Brunori, 911 Pounds (inspired by SoS 9EE series), 991 GT3 RSR 2017, and Vision 916 (inspired by SoS E-Conversion series)

3. Edward Tseng, GT

4. Alan Derosier, 931 Slant Nose Jägermeister (inspired by SoS 9x9 and 91x projects)

5. Yann Jarsalle, 917

6. Tom Harezlak, 903

7. Gilsung Park, Electric Le Mans 2035 (inspired by SoS Street Legal series, for example 962 ST with 2000 PS and other models)

8. Tom Harezlak, 903

© Listed companies, designers, and photographs, and Style of Speed

The second collage shows designs of the rear section (from left to right and top to bottom):

1. Porsche, Vision Gran Turismo 2021 (both alternative designs of the rear section of 911 Pounds project, one design of rear section of 911 Pounds was also shown before with Mission R, because of SoS 9x9 RSR and 91x projects)

2. Porsche, Marco Brunori, 911 Next Generation

3. Radek Stepan, Hover Porsche (inspired by SoS Speeder series)

4. Porsche, Marco Brunori, 911 Next Generation

5. Volkswagen, Min Byungyoon, 911 100 (lowest largest image)

6. Geely and Etika Automotive, Lotus, Evija (inspired by Style of Speed Street Legal, for example 962 ST with 2000 PS and other models)

7. Porsche 919 Street (inspired by Style of Speed Street Legal series, for example 962 ST with 2000 PS and other models)

8. Artem Popkov, 911 with mid-engine layout (inspired by SoS 9x9 Modern project)

9. Porsche, Marco Brunori, 911 Next Generation, 959 Hommage (inspired by SoS 9x9 935 Hommage project also copied by Porsche with 991 GT2 RS 935 Hommage)

© Listed companies, designers, and photographs, and Style of Speed

As one can see, the holes in front of the rear wheels at the sides of our 91x have an aerodynamic function.

But missing is one more thing, which is our next world's first.

15.June.2022

17:30 and 17:55 UTC+2

Ontonics Further steps

We will talk with the company Alphabet (Google) about its takeover and the related plan, like for example the one discussed in the Further steps of the 4th of November 2018.

If there is an interest in realizing this act, then we can begin with the big calculation of the damages, royalties, evaluations, and set-offs, but we are not sure if the result will be positive or negative for Alphabet and therefore if a reasonable compensation is justified.

In addition, the activities in relation to the undisclosed field and the company Amazon might have got another additional huge momentum by the very potential join up of another not so small undisclosed entity. And yes, it is about the Next Superbolt™ New ...™ Blitz™.

We are also continuously looking at other takeover candidates.

King Smiley Further steps

Let us discuss the

in this little village.

The rest of the world does not sleep, either. :)

19.June.2022

22:55 and 29:55 UTC+2

OpenAI GPT and DALL-E are based on Evoos and OS

might become Clarification or Investigations::AI and KM

*** Revision - correction and better explanation ***

Certain combinations and integrations of the fields of

are around 25 years old and work better and better by brute force respectively more power of computers.

See for example the following works in case of EC and ANN:

The authors propose a method based on biological metaphors to find automatically good Modular Artificial Neural Network (MANN) structures using a computer program. They argue that MANNs have a better performance than their non-modular counterparts and that the human brain can also be seen as a Modular Neural Network (MNN) and therefore propose a search method "based on the natural process, that resulted in the brain: Genetic Algorithms are used to imitate evolution, and L-systems [(a Lindenmayer system is a parallel rewriting system and a type of formal grammar)] are used to model the kind of recipes nature uses in biological growth".
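To make the bracketed remark about L-systems concrete, here is a minimal Python sketch of a parallel rewriting system. It is not the method of the quoted authors; the function name `lsystem` is hypothetical, and the rule set is Lindenmayer's well-known algae example (A -> AB, B -> A), used here only as an illustration.

```python
def lsystem(axiom, rules, generations):
    """Rewrite every symbol of the word in parallel, once per generation."""
    word = axiom
    for _ in range(generations):
        # Symbols without a rule are copied unchanged.
        word = "".join(rules.get(symbol, symbol) for symbol in word)
    return word


# Lindenmayer's algae system: A -> AB, B -> A
ALGAE = {"A": "AB", "B": "A"}

for n in range(6):
    word = lsystem("A", ALGAE, n)
    print(n, len(word), word)
```

In this example the word lengths follow the Fibonacci sequence, which shows how a very short recipe can describe a growing structure.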

See also the Ontologic roBot (OntoBot) component based on the fields of

- Rewriting Logic (RL),

- Algorithmic Information Theory (AIT),

- fractal set,

- chaos theory,

- complex system theory,

- adaptive system,

- Algorithmic Botany, and

- other works referenced in the section Algorithmic/Generative/Evolutionary/Organic ... Art/Science of the webpage Links to Software of the website of OntoLinux.

The author concludes that the application of Genetic Algorithm (GA) for the synthesis of Artificial Neural Networks (ANNs) using Cellular Encoding (CE) is Genetic Programming (GP) of Artificial Neural Networks (ANNs) (GA + CE = GP of neural networks). Consequently, the synthesis is called a Genetic Neural Network (GNN) and the synthesis method needs no learning.

Specifically interesting and important is that CE

- is a method for encoding families of similarly structured boolean neural networks, that can compute scalable boolean functions,

- is based on modularity and Modular Artificial Neural Network (MANN) or simply Modular Neural Network (MNN), and

- is a parallel graph grammar that checks a number of properties.

The authors conclude that the application of Genetic Algorithm (GA) is inappropriate for network acquisition and apply Evolutionary Programming (EP) to construct a Recurrent Neural Network (RNN) directly with the GeNeralized Acquisition of Recurrent Links (GNARL), which simultaneously acquires both the structure and weights without an intermediate Cellular Encoding (CE) step.

The authors apply Evolutionary Programming (EP) to construct a Feedforward Neural Network (FNN) directly. Consequently, the approach is called EPNet.

{better explanation required}

But the genetic synthesis of ANNs does not include integrations of other subfields of SoftBionics (SB), also called Multimodal SoftBionics (MSB or MMSB) and including the subfields of

specifically

and utilizations for behaviour-based Robotic System (RS), or being more precise, an integrated physical humanoid robot, other fields related to Cognitive Computing (CogC), human-like computing, and so on.

{better explanation required} But due to the lack of symbol grounding

are possible.

Our Evolutionary operating system (Evoos) was created for these technologies, applications, and services.

{end of correction and better explanation}

We also quote a webpage, which is about the gas network or simply gas net in the context of Machine Learning (ML), Artificial Neural Network (ANN), and Evolutionary Computing (EC), was presented at the International Conference on Artificial Neural Networks in September 1998, and was published on the 3rd of October 1998: "Gas on the brain

[...]

[...] One of the chief routes towards this goal has been to increase the number of nodes and the richness of their interconnections. So how is it that researchers [...] have managed to create devices with the capability of large, complex neural nets that consist of just a handful of nodes and sometimes not a single interconnection?

The answer, which is inspired once again by the workings of the human brain, lies in a virtual gas. The researchers' approach [...] opens the way for a new generation of powerful, lean computers, which they call "gas nets". The notion of gas nets is also giving neuroscientists a way to improve their simulations of the workings of the brain. "It represents a considerable step forward in understanding biological and artificial neural networks," [...].

Though neural computers are based on the brain, they are actually pretty imperfect models - and not just because they have such paltry numbers of nodes and interconnections. A brain cell fires off an electrical impulse when the sum of the signals it receives from other neurons reaches a certain threshold. This much is copied by neural networks. A node carries out a mathematical procedure - which may be simple addition or something more complicated - on the inputs it receives from other nodes. If the result is above a certain threshold, then it fires an output.

In the brain, neurons are separated by gaps, called synapses, and communication across these tiny chasms is carried out by chemical messengers, called neurotransmitters. So an impulse from one neuron must first be converted into a neurotransmitter, which is then converted back to electricity by the receiving neuron. To complicate the picture, different synapses have different effects on the receiving neuron - some may stop it firing, for example - and the effects change over time.

To mimic these effects, the wires between nodes in neural computers carry a variable weighting: each one may increase or attenuate the signal it carries. This is the key to how neural computers "learn". A network is "trained" to recognise different patterns of input signals by changing the weights and firing thresholds of the nodes until it produces the required output.

However, computer scientists have largely ignored synaptic chemistry. As a result, neural computers miss out many subtle effects that take place in the brain. Sometimes, for example, a neurotransmitter released at one synapse can change the way the receiving neuron responds to signals arriving at its other synapses - either boosting or blunting them.

Nor are all these "neuromodulatory" effects confined to interconnected neurons. A decade ago, brain researchers were surprised to find that a neurotransmitter could spread its modulatory message to distant neurons. [...] That chemical is nitric oxide (NO).

Because NO is so much smaller than other neurotransmitters, it can pass unhindered through cell membranes. And when the gas meets a neuron with a NO receptor, it can raise the amount of neurotransmitter released by that neuron in response to an electrical impulse. In effect it amplifies the neuron's influence on the cells it feeds into. The discovery of NO's long-range abilities demolished the notion that neurons communicate only via synapses and only with their neighbours. It also showed that an artificial neural network with nodes connected by wires alone was not just an imperfect model of the brain, but a pale shadow of it.

Whiff of gas

The work [...] brings neural computing closer to current thinking in neuroscience, by adding a virtual equivalent of NO. It is taking place at the Centre for Computational Neuroscience and Robotics, a unit set up in 1996 to encourage neuroscience and computing researchers to talk to one another. It was here that [a] neuroscientist [...] told [...] a specialist in evolutionary robotics, about having gas on the brain. "I didn't know about NO at all until about a year ago," says [a specialist in evolutionary robotics]. "It struck me immediately that it was interesting from a control engineering point of view. I saw that gases could modulate the network without changing the wires."

Together with his colleague[s a specialist in evolutionary robotics] has developed methods for creating software simulations of neural networks by harnessing the power of evolution. His networks act as controllers for robots, allowing them to perform simple tasks [...]. To start with, [a specialist in evolutionary robotics] used conventional neural networks. But after talking to [a neuroscientist] he decided to add a whiff of gas to see what would happen.

To create one of his controllers, [a specialist in evolutionary robotics] uses a genetic algorithm which treats the features of a network as though they are genes to be passed from one generation to the next. The number of nodes, the patterns of wiring between them, the weightings applied to those wires and the firing thresholds of the nodes are all thrown into the genetic mixer. For robots using a camera to see, [a specialist in evolutionary robotics] also allows the algorithm to choose any number of pixels from the camera image and how they connect to the nodes.

Next, a computer generates 100 different networks, all with randomly chosen values for the features. Each network is tested to see how well it performs, using a computer simulation of the [given] problem [task]. Poorly performing networks are thrown out, but the better networks are allowed to reproduce by swapping a gene - a feature's value - here and there. The values assigned to a feature can also change at random, mimicking the mutations that happen in nature. The new networks created in this way are then tested once more and the whole cycle repeated. Successive generations yield networks that do a better and better job of guiding the robot to its goal, until eventually an optimal solution emerges.

The random nature of the evolutionary process means that the genetic algorithm does not converge on the same "best" network every time it runs. [A specialist in evolutionary robotics] hoped that adding gas would increase the number of ways that networks could evolve, and perhaps generate simpler solutions. But there was a problem.

"There isn't any space or time in conventional neural networks," says [a specialist in evolutionary robotics]. For virtual NO [gas] to have any effect, the positions of all the nodes would have to be known, together with some way to describe how the gas diffuses over time. To keep things simple, [a specialist in evolutionary robotics] and his colleagues limited the nodes to a flat surface, rather than three-dimensional space, and used a fairly crude description of how the gas would diffuse in a growing circle.

So, the genetic algorithm for the gas net has to take into account the positions of nodes, the "firing threshold" at which a node will emit the gas, the speed of diffusion of the gas and whether the receiving neurons became more or less sensitive to incoming signals. All this on top of the features of a regular neural net.

Working with [a ...] student, [a specialist in evolutionary robotics] decided to repeat a series of tasks previously tackled by [a mathematician]. Using genetic algorithms, [a mathematician] had consistently evolved conventional neural networks for the [given problem] task after about 6000 generations. A typical successful network used 46 nodes, well over 100 wires and eight pixels from the camera's output.

By comparison, [a specialist in evolutionary robotics] and [a student] found that a gas net capable of guiding a robot to a [goal] rarely needed more than 1000 generations, and in some cases they emerged after only a couple of hundred. The gas nets were also far simpler than [a mathematician]'s. A typical gas net used between 5 and 15 nodes and only two or three pixels. Even more remarkable, the nodes of the gas nets were connected by hardly any wires: they influenced one another mostly via the virtual gas.

"This demonstrates the power provided by having two distinct yet interacting processes at play. Signals are flowing down the wires connecting the nodes at the same time as the gas modulates the properties of the nodes," says [a specialist in evolutionary robotics]. "Structurally simple yet dynamically sophisticated networks could be really useful in, for example, space missions, where you need minimalist systems."

Route finder

[...]

[...] One curious aspect of this experiment is that some of the final gas nets operated without any gas at all, although all of them used gas during their evolution. This suggests the gas played a key part in the learning process of the networks.

[A neuroscientist] finds this particularly interesting. He and other neuroscientists suspect that NO [gas] has an important role in learning and memory in the brain. "One thing that happens as a result of learning is that the structure of the nervous system changes," says [a neuroscientist]. If a synapse is used frequently, the amount of neurotransmitter that crosses it increases, so the transmitting neuron has a greater effect on the receiving cell. This synaptic "strengthening" leaves a long-term memory of prior brain activity. But how does it happen? As NO [gas] can diffuse backwards [and hence bidirectional] across synapses from the receiving to the transmitting neuron, it looks like a strong candidate.

[...]

But what of the strategy of building ever bigger networks with more and more complicated wiring? This is the approach, for example, of Hugo de Garis [...]. [A specialist in evolutionary robotics] is sceptical of this approach. "Often by adding more connections you tend to screw up something that is just starting to work," he says. "Gas effects aren't permanent. They're only active when necessary. It's a gentler kind of effect."

Gas makes neurons more plastic, says [a neuroscientist]. "For any connectivity pattern you can have a number of different behaviours depending on the way it is modulated by gas," he says. "Neurons in the circuit are different at different times."

It is too early to tell where NO [gas] will eventually lead. "This may send neural network research off in a new direction," says [a specialist in evolutionary robotics]. His next goal is to develop gas-net robots with more complex behaviour than any robot controlled by a neural net. "If you want to build machines with anything like significant levels of intelligence, we think that wires and nodes will not be enough," he says. "You'll also need an artificial pharmacology."
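The evolutionary cycle described in the quoted article (generate 100 candidates, test them, throw out the poor ones, let the better ones reproduce by swapping genes, mutate at random, repeat) can be sketched in Python as follows. This is a hedged illustration and not the researchers' software: the function `evolve`, its parameters, and the stand-in task with the vector `TARGET` are assumptions made for this example, and a genome is a plain list of numbers rather than a neural network or gas net.

```python
import random


def evolve(fitness, genome_length, population_size=100, generations=200,
           mutation_rate=0.1, seed=0):
    """Truncation selection with uniform crossover and Gaussian mutation."""
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]
    for _ in range(generations):
        # Test every candidate and throw out the poorer half.
        population.sort(key=fitness, reverse=True)
        survivors = population[:population_size // 2]
        children = []
        while len(survivors) + len(children) < population_size:
            mother, father = rng.sample(survivors, 2)
            # Reproduce by swapping genes here and there (uniform crossover).
            child = [rng.choice(pair) for pair in zip(mother, father)]
            # Values can also change at random (mutation).
            child = [g + rng.gauss(0, 0.1) if rng.random() < mutation_rate else g
                     for g in child]
            children.append(child)
        population = survivors + children
    return max(population, key=fitness)


# Hypothetical stand-in task: the genes should approach a fixed target vector.
TARGET = [0.5, -0.25, 0.75, 0.0]


def fitness(genome):
    """Higher is better; 0 would be a perfect match with TARGET."""
    return -sum((g - t) ** 2 for g, t in zip(genome, TARGET))


best = evolve(fitness, len(TARGET))
print(best, fitness(best))
```

In a gas net setting the genome would additionally carry the node positions, the gas emission thresholds, and the diffusion speed listed in the article, and the fitness would come from a simulation of the robot task.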

Comment

Our Evolutionary operating system (Evoos) was created for these technologies, applications, and services.

A similar approach respectively a rudimentary simulation of gas networks is the attention mechanism, specifically the cross-attention respectively encoder-decoder attention mechanism between an encoder and a decoder of a transducer, such as a transformer, reformer, and perceiver model in the field of ML, and the cross-attention between a byte array and a latent array to another latent array of a general transducer, such as a perceiver.
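For readers unfamiliar with the mechanism, the following is a minimal pure-Python sketch of scaled dot-product cross-attention, in which the queries come from one array (the decoder or latent array) and the keys and values from another (the encoder or byte array). It is a simplification under stated assumptions: the learned projection matrices of a real transformer or perceiver are omitted, and the names `softmax`, `cross_attention`, `encoder_states`, and `decoder_queries` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Each query row attends over all key rows and mixes the value rows."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


# Three encoder positions (keys = values here) and two decoder queries.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_queries = [[2.0, 0.0], [0.0, 2.0]]

for row in cross_attention(decoder_queries, encoder_states, encoder_states):
    print([round(x, 3) for x in row])
```

The weights change the influence of every input position on the output without changing any connection between the positions, which is the loose similarity to a diffusing modulator.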

We can also use our Wireless Supercomputer (WiSer), as said in the Clarification of the 29th of April 2016.

We also quote an online encyclopedia about the subject transduction or transductive inference in the context of Machine Learning (ML): "In logic, statistical inference, and supervised learning, transduction or transductive inference is reasoning from observed, specific (training) cases to specific (test) cases. In contrast, induction is reasoning from observed training cases to general rules, which are then applied to the test cases. The distinction is most interesting in cases where the predictions of the transductive model are not achievable by any inductive model. Note that this is caused by transductive inference on different test sets producing mutually inconsistent predictions.

Transduction was introduced by Vladimir Vapnik in the 1990s, motivated by his view that transduction is preferable to induction since, according to him, induction requires solving a more general problem (inferring a function) before solving a more specific problem (computing outputs for new cases): "When solving a problem of interest, do not solve a more general problem as an intermediate step. Try to get the answer that you really need but not a more general one."[1] A similar observation had been made earlier by Bertrand Russell: "we shall reach the conclusion that Socrates is mortal with a greater approach to certainty if we make our argument purely inductive than if we go by way of 'all men are mortal' and then use deduction" (Russell 1912, chap VII).

An example of learning which is not inductive would be in the case of binary classification, where the inputs tend to cluster in two groups. A large set of test inputs may help in finding the clusters, thus providing useful information about the classification labels. The same predictions would not be obtainable from a model which induces a function based only on the training cases. Some people may call this an example of the closely related semi-supervised learning, since Vapnik's motivation is quite different. An example of an algorithm in this category is the Transductive Support Vector Machine (TSVM).

A third possible motivation which leads to transduction arises through the need to approximate. If exact inference is computationally prohibitive, one may at least try to make sure that the approximations are good at the test inputs. In this case, the test inputs could come from an arbitrary distribution (not necessarily related to the distribution of the training inputs), which wouldn't be allowed in semi-supervised learning. [...]"

Comment

The field of transducers includes transformer, reformer, and perceiver models, which are based on the encoder-decoder architecture.
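The clustering example from the quoted passage on transduction can be illustrated with a small sketch. It assumes one-dimensional inputs, one labelled training case per class, and a plain 2-means clustering over training and test inputs together; all names and the toy data are hypothetical and only serve the illustration.

```python
def two_means(points, start_a, start_b, rounds=20):
    """Plain 1-D 2-means clustering; returns the two final centres."""
    centre_a, centre_b = start_a, start_b
    for _ in range(rounds):
        group_a = [p for p in points if abs(p - centre_a) <= abs(p - centre_b)]
        group_b = [p for p in points if abs(p - centre_a) > abs(p - centre_b)]
        if group_a:
            centre_a = sum(group_a) / len(group_a)
        if group_b:
            centre_b = sum(group_b) / len(group_b)
    return centre_a, centre_b

def transductive_labels(labelled, test_inputs):
    """Label the given test inputs by clustering them with the training cases.

    labelled: exactly two (value, label) pairs, one per class.
    No general decision rule is returned, only labels for these test inputs.
    """
    (xa, label_a), (xb, label_b) = labelled
    points = [xa, xb] + list(test_inputs)
    centre_a, centre_b = two_means(points, xa, xb)
    return [label_a if abs(x - centre_a) <= abs(x - centre_b) else label_b
            for x in test_inputs]

# Hypothetical toy data: one training case per class, test inputs in two clumps.
training = [(1.0, "A"), (4.0, "B")]
tests = [0.5, 0.8, 1.2, 2.9, 6.0, 6.2, 6.5, 7.0]
predicted = transductive_labels(training, tests)
```

With this toy data the test input 2.9 receives the label "A", although it lies closer to the "B" training case at 4.0. The test inputs themselves have moved the cluster centres, which is the kind of information a rule induced from the two training cases alone would not have.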

We also quote an online encyclopedia about the subject Self-Organizing Map (SOM): "A self-organizing map (SOM) or self-organizing feature map (SOFM) is an unsupervised machine learning technique used to produce a low-dimensional (typically two-dimensional) representation of a higher dimensional data set while preserving the topological structure of the data. For example, a data set with p variables measured in n observations could be represented as clusters of observations with similar values for the variables. These clusters then could be visualized as a two-dimensional "map" such that observations in proximal clusters have more similar values than observations in distal clusters. This can make high-dimensional data easier to visualize and analyze.

An SOM is a type of artificial neural network but is trained using competitive learning rather than the error-correction learning (e.g., backpropagation with gradient descent) used by other artificial neural networks. The SOM was introduced by the Finnish professor Teuvo Kohonen in the 1980s and therefore is sometimes called a Kohonen map or Kohonen network.[1][2 [Self-Organized Formation of Topologically Correct Feature Maps". Biological Cybernetics. [1982]]] The Kohonen map or network is a computationally convenient abstraction building on biological models of neural systems from the 1970s[3 [Self-organization of orientation sensitive cells in the striate cortex. Kybernetik. [1973]]] and morphogenesis models dating back to Alan Turing in the 1950s.[4 [The chemical basis of morphogenesis. [1952]]]"
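The competitive learning mentioned in the quote can be sketched as follows. This is a deliberately small one-dimensional map in plain Python, assuming a linearly decaying learning rate and a fixed neighbourhood radius; these choices, the function names, and the parameters are assumptions for illustration and not Kohonen's original formulation.

```python
import random

def best_matching_unit(units, sample):
    """Index of the unit whose weight vector is closest to the sample."""
    dists = [sum((w - x) ** 2 for w, x in zip(u, sample)) for u in units]
    return dists.index(min(dists))

def train_som(data, n_units, epochs=50, lr=0.5, radius=1, seed=0):
    """Train a 1-D self-organizing map by competitive learning.

    For every sample the best-matching unit and its map neighbours within
    `radius` are pulled towards the sample. No error signal and no
    backpropagation are involved.
    """
    rng = random.Random(seed)
    dim = len(data[0])
    units = [[rng.random() for _ in range(dim)] for _ in range(n_units)]
    for epoch in range(epochs):
        rate = lr * (1 - epoch / epochs)  # linearly decaying learning rate
        for sample in data:
            bmu = best_matching_unit(units, sample)
            lo, hi = max(0, bmu - radius), min(n_units, bmu + radius + 1)
            for i in range(lo, hi):
                units[i] = [w + rate * (x - w)
                            for w, x in zip(units[i], sample)]
    return units

# Hypothetical toy data: two small groups of 2-D points.
data = [[0.1, 0.1], [0.15, 0.05], [0.9, 0.9], [0.85, 0.95]]
som = train_som(data, n_units=4)
```

After training, `best_matching_unit(som, sample)` maps a sample to a position on the map, which is the low-dimensional representation the quote refers to.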

Comment

The document titled "Symbol Grounding Transfer with Hybrid Self-Organizing/Supervised Neural Networks" was published in the year 2004 and is based on

- is based on cognitive attention on the one hand and

- includes self-attention on the other hand, and also

- includes Query-Key-Value (QKV) attention, which applies query, key, and value networks, which again are typical for a Multi-Layer Perceptron (MLP),

{correction and better explanation required}

and

- is based on an Artificial Neural Network (ANN),

- belongs to Supervised Learning (SL) methods insofar as the goal is to generate a classified output from the input,

- is viewed as an intermediate form between supervised and unsupervised learning, and

- is similar to the Transfer Learning (TL) and Semi-Supervised Learning (SSL or SemiSL) methods.

German version since 3rd of November 2020; English version since 9th of June 2021

{end of correction and better explanation}

The word2vec technique for NLP translates words into numbers respectively produces word embedding vectors by using ANN and retaining syntactic and semantic proximities respectively associations of words.

The technique has been developed further in variants, which produce contextual word embedding vectors and contextual string embeddings with context2vec and, for example, by retaining partial language modelling prediction and next sentence prediction.

See also the

Our Evoos is based on cybernetics, bionics, self-organization, Artificial Life (AL), ML, ANN, multi-layer and meta-layer structure or architecture, was created for these technologies, applications, and services, or even created said integrated subfield of SB.

See also the so-called transformer, reformer, and perceiver models in the field of ML.

Our Evoos was created further with our Ontologic System (OS) with its Ontologic System Architecture (OSA), which integrates for example

- drawing,

- painting,

- modelling,

- rendering, and

- raytracing.

Our Evoos also describes more technologies (see for example the related note Google NTM, DNC, Transformer, BERT, and Perceiver are based on Evoos and OS of today).

Generative Pre-trained Transformer (GPT) is based on the

- word embedding technique,

- attention mechanism, and

- Self-Supervised Learning (SSL or SelfSL),

and one of the variants

and therefore it is based on either

But with 2D and 3D, as implemented with the program DALL-E, which is a multimodal implementation of GPT-3 and generates images from textual descriptions, we are even deeper in the legal scope of ... the Ontoverse (Ov), aka. OntoLand (OL).

See for example the

- OntoBot and

- OntoBlender,

and the

of the webpage Links to Software of the website of OntoLinux).

Implementing respectively reproducing and publishing respectively performing our copyrighted works of art respectively properties in whole or in part as Free and Open Source Software (FOSS) and Free and Open Source Hardware (FOSH) will have serious consequences for all responsible entities.

We already said that sooner or later Elon Musk will end in jail for many years and some other very well known persons should also focus on cleaning up the whole mess before we do so in the not so far away future.

By the way:

20.June.2022

03:35 and 05:55 UTC+2

Google NTM, DNC, Transformer, BERT, and Perceiver are based on Evoos and OS

*** Revision - better explanation somehow ***

Google has implemented respectively reproduced and published respectively performed essential parts of our copyrighted works of art titled

and created by C.S. for the so-called

It seems that Alphabet is still learning and taking our Evoos and OS as a source of inspiration and a blueprint.

See also for example the

which discusses the fields of

in relation to our Evoos and OS, and also all these performances and reproductions of them.

05:55 UTC+2

Success story continues and no end in sight

*** Revision - better explanation somehow ***

We got a lot more evidence that we have revolutionized everything with our original and unique works of art titled

and created by C.S., as can be easily seen with companies and their technologies, goods, and services based on our fields of HardBionics (HB) and SoftBionics (SB), such as for example

- Neural Turing Machine (NTM),

- Differentiable Neural Computer (DNC),

- Transformer Machine Learning (ML) model,

- Bidirectional Encoder Representations from Transformers (BERT), and

- Perceiver transformer,

- Generative Pre-trained Transformer (GPT) and

- DALL-E image generator,

- Turing Natural Language Generation (T-NLG),

- GPT-3 Application Programming Interface (API), and

- other technologies, goods, and services,

But we must caution about the basic brute force approach, because the resulting models, generators, systems, applications, etc. are not validated and verified, and therefore not trustworthy, and validating and verifying them is a totally different and much more expensive task, if they can be validated and verified at all.

In fact, they are like children, who have got a matchbox and are now playing with fire, and it is already jumping out of the rails.

By the way:

21.June.2022

01:00 and 11:00 UTC+2

Summary of website revision

We have added to the note OpenAI GPT and DALL-E are based on Evoos and OS of the 19th of June 2022 corrections and quotes of

and

to give more information and explanations in relation to the so-called transformer, reformer, and perceiver.

The notes

might become a clarification or be moved to the one or more related clarifications.

While looking at the matter, we had the impression that Self-Supervised Learning (SSL) is presented as being quite new.

Indeed, we noted that the

Further research into the origin of SSL provided two sources

which means Natural Sound Processing (NSP),

for unsupervised learning, and

for self-supervised learning. Note that Long Short-Term Memory (LSTM) based on Recurrent Neural Network (RNN) was also developed at the Technical University Munich, which is the reason why Alphabet (Google), Apple, Amazon, and Co. collaborated with it and not with us. So we already have the next stealing of our property in this field, because those entities do not know what to steal before we showed what we do.)

A further research of

gave

Note that

- Self-Organizing Map (SOM) is also based on Artificial Neural Network (ANN) and

- Geoffrey Hinton is Hinton, G.E..

But a SOM only belongs to the Unsupervised Learning (UL or USL) part of SSL and prior art is based on a hybrid and modular connectionist model.

The work is based on a hybrid and modular connectionist model consisting of an unsupervised Self-Organizing Map (SOM) and a supervised Multi-Layer Perceptron (MLP) for categorizing and naming, and references

- Hinton, G.E., Becker, S.: An unsupervised learning procedure that discovers surfaces in random-dot stereograms. 1990.

"The simulations rely on a particular network module called the categorizing and learning module. This module, developed mainly for unsupervised categorization and learning, is able to adjust its local learning dynamics. The way in which modules are interconnected is an important determinant of the learning and categorization behaviour of the network as a whole."

This implies that SSL was created with

Our Evoos adds

A. Cangelosi also wrote at least 2 documents together with S. Harnad, who again is the author of the document titled "The Symbol Grounding Problem" from 1990.

"Schyns calls it "mapped functional modularity". His model contains an unsupervised module that categorises the stimulus set, while a supervised module connects labels to their representations. [...] However, Schyns's model is limited to the direct grounding of basic category names. No names of higher-order categories are learned via symbolic instructions, and therefore the grounding transfer mechanism does not apply. Instead, he concentrates on prototype effects and conceptual nesting of hierarchical category structures. Symbols are only used as indicators of knowledge and facilitators of concept extraction. [...] the present work builds on Schyns's [...]."

The work references the document titled "A Self-Supervised Terrain Roughness Estimator for Off-Road Autonomous Driving".

Alphabet (Google)→Google Brain and Google Research and University of Toronto, et al.: Attention Is All You Need.

The work is about the so-called Transformer and cites 2 works about self-training:

- McClosky, D., Charniak, E., Johnson, M.: Effective self-training for parsing. June 2006.

- Huang, Z., Harper, M.: Self-training PCFG grammars with latent annotations across languages. August 2009.

11:04 UTC+2

Facebook Web2vec is based on Evoos and OS

24.June.2022

08:28 UTC+2

Short summary of clarification

We have continued the work related to the Clarification of the 8th of May 2022.

We quote an online encyclopedia about the subject homoiconicity: "In computer programming, homoiconicity (from the Greek words homo- meaning "the same" and icon meaning "representation") is a property of some programming languages. A language is homoiconic if a program written in it can be manipulated as data using the language, and thus the program's internal representation can be inferred just by reading the program itself. This property is often summarized by saying that the language treats "code as data".

In a homoiconic language, the primary representation of programs is also a data structure in a primitive type of the language itself. This makes metaprogramming easier than in a language without this property: reflection in the language (examining the program's entities at runtime) depends on a single, homogeneous structure, and it does not have to handle several different structures that would appear in a complex syntax. Homoiconic languages typically include full support of syntactic macros, allowing the programmer to express transformations of programs in a concise way.

A commonly cited example is Lisp, which was created to allow for easy list manipulations and where the structure is given by S-expressions that take the form of nested lists, and can be manipulated by other Lisp code.[1] Other examples are the programming languages Clojure (a contemporary dialect of Lisp), Rebol (also its successor Red), Refal, Prolog, and more recently Julia.

[...]

Uses and advantages

One advantage of homoiconicity is that extending the language with new concepts typically becomes simpler, as data representing code can be passed between the meta and base layer of the program. The abstract syntax tree of a function may be composed and manipulated as a data structure in the meta layer, and then evaluated. It can be much easier to understand how to manipulate the code since it can be more easily understood as simple data (since the format of the language itself is as a data format).

A typical demonstration of homoiconicity is the meta-circular evaluator. [Meta-circular evaluator: "The term itself was coined by John C. Reynolds,[1] popularized through its use in the book Structure and Interpretation of Computer Programs.[2][6]"]"
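The "code as data" property described in the quote can be illustrated with a small sketch. Python itself is not homoiconic, so the sketch assumes nested lists as a stand-in for S-expressions; the operator table and the function names are hypothetical and chosen only for this illustration.

```python
import operator

# Programs are plain nested lists in prefix form, e.g. ["+", 1, ["*", 2, 3]].
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def evaluate(expr):
    """Evaluate a nested-list expression in prefix form."""
    if isinstance(expr, (int, float)):
        return expr
    op, *args = expr
    values = [evaluate(a) for a in args]
    result = values[0]
    for v in values[1:]:
        result = OPS[op](result, v)
    return result

def swap_operator(expr, old, new):
    """Rewrite a program as data: replace one operator symbol everywhere."""
    if not isinstance(expr, list):
        return expr
    head = new if expr[0] == old else expr[0]
    return [head] + [swap_operator(a, old, new) for a in expr[1:]]

program = ["+", 1, ["*", 2, 3]]
rewritten = swap_operator(program, "*", "+")
```

Because the program is an ordinary list, the same list operations that handle data can build, inspect, and transform it before it is evaluated. In a homoiconic language this comes with the language itself rather than through an emulation like the one above.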

We looked once again at the Robinson diagram, because we only found

and something related to logics, models, and formal languages, specifically first-order languages, but nothing pictorial, specifically "diagrams in the sense of pictures with arrows, as in category theory".

Eventually, it turned out that we simply have not connected the term Robinson diagram with the set of all closed literals of the signature L(sequence c of distinct new constant symbols) of an L-structure A, which are true in (A,a) in relation to the

Even more interesting, we found out the following in

"Introduction

According to [an online encyclopedia], a diagram is a symbolic representation of information, intended to convey essential meaning using visualization techniques. Although the word 'diagram' may suggest a picture, there was nothing pictorial about Robinson's use of this term in model theory. Nonetheless, Robinson's diagram is a symbolic representation of information. [...]

^3 In 1915, Leopold Löwenheim proved that if a first-order sentence has a model, then it has a model whose domain is countable. In 1922, Thoralf Skolem generalized this result to whole sets of sentences. He proved that if a countable collection of first-order sentences has an infinite model, then it has a model whose domain is only countable. This is the result which typically goes under the name of the Löwenheim-Skolem Theorem.

^4 If a countable first-order theory has an infinite model, then for every infinite cardinal number k it has a model of size k, and no first-order theory with an infinite model can have a unique model up to isomorphism.

^5 Skolem showed the weakness of formal language by means of a suitable construction of proper extensions of the system of natural numbers PA. This extension has the properties of natural numbers to the extent that these properties cannot be expressed in the lower predicate calculus in terms of quality, addition, and multiplication. These extensions of natural numbers are called 'the nonstandard models of arithmetic'. In addition, the Löwenheim-Skolem theorem showed that a collection of axioms cannot determine the size of a model: Every collection of axioms having an infinite model also has models of every infinite cardinal. An example of a nonstandard model of arithmetic is:

....... 1,2,3,4.... 1,2,3,4.... 1,2,3,4.... 1,2,3,4....

[...] Robinson's philosophical point of view, which linked epistemology, formal language and existence.^6 Using formal language and logic as tools, together with the philosophical position that links semantics and syntactic[s] on one hand and epistemology, formal language, and existence on the other, will enable us to preserve the certainty of the classical notion of truth and reference without postulating non-natural mental powers.^7

The empirical perspective

[...]

Diagrams as an intersection of semantics and syntax

[...]

^6 Meaning the intended model will be the elementary sub-model of all the nonstandard models.

^7 Since Robinson was concerned with objectivity and therefore in objective concepts, he was very interested in methods for completing formal systems and defining tests for verifying their completeness.

[...]

^8 Of course, there is no technical impediment to defining these enormous languages. But model theory in this context is regarded as merely a branch of pure mathematics, and therefore there is no real reason to worry about any of this.

Diagrams as a tool for pointing at objects

[...]

Model complete

[...]

Prime model

[...]

The prime model is unique.

[...]

Diagram, persistence, prime model M0 and the transfer principle

[...]

Summary And Conclusions.

This paper presents a possible way to address Skolem's criticism of formal languages using Robinson's tools, taken from model theory, such as diagram, model complete and prime model. The existence of different models that are not equivalent even to a complete formal system K is very disturbing, because the immediate consequence is that it is not possible to uniquely describe what a natural number is using the formal language L.

Robinson believed that symbols in the formal system have a meaning that we cannot avoid. As he regarded semantics to be a part of mathematics, it was therefore possible and important for him to unite semantics and syntax into a single formal system.

Robinson called this formal system 'a diagram'. Robinson thought of a diagram as a link between a formal system and its model. When a set K of axioms is complete, then K together with its diagram create a syntactic reflection of this model. According to Robinson, sometimes there is no distinction between syntax and semantics, since one may even assume that the relations and constants of the structure belong to the language and denote themselves (Robinson 1956, [Complete Theories,] 6).

[...]

Robinson's diagram has rightfully earned the title 'diagram' since it symbolically represents the syntactic as well as the semantic information of a complete set of axioms K and its intended model M.

Robinson believed that one of the goals of mathematics should be a deeper understanding of its concepts. Perhaps a more profound comprehension of these notions will eventually lead to advancement in the philosophical understanding of logic and mathematics, concepts which in recent years have been overshadowed by technical achievements.

According to Robinson, logic serves as wings to mathematics, allowing it to fly. (Robinson, 1964a, [Between Logics and Mathematics,] 220). I hope that the discussion presented here regarding Skolem's critique of formal languages is an example of this saying."
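To make the non-pictorial notion of a diagram more concrete, the following sketch enumerates the closed literals that are true in a small finite structure after adding one new constant per element. It assumes a finite domain, binary relations only, and no function symbols; the representation of literals as strings, the naming scheme c_<element>, and the example structure are assumptions for illustration.

```python
from itertools import product

def robinson_diagram(domain, relations):
    """Closed literals true in a finite structure with one constant per element.

    domain: list of elements.
    relations: dict mapping a relation name to a set of pairs (binary only).
    Constants are written c_<element>; equality is handled like a relation.
    """
    literals = set()
    for a, b in product(domain, repeat=2):
        sign = "" if a == b else "not "
        literals.add(f"{sign}c_{a} = c_{b}")
        for name, pairs in relations.items():
            sign = "" if (a, b) in pairs else "not "
            literals.add(f"{sign}{name}(c_{a}, c_{b})")
    return literals

# Hypothetical example structure: {0, 1, 2} with the usual strict order.
domain = [0, 1, 2]
relations = {"less": {(0, 1), (0, 2), (1, 2)}}
diagram = robinson_diagram(domain, relations)
```

For this structure the set contains 18 literals, among them `less(c_0, c_1)` and `not less(c_1, c_0)`. The set is purely symbolic, which matches the remark in the quote that there is nothing pictorial about this use of the term.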

Comment

Robinson also did what Günther did in relation to symbols and languages, and their foundations and interpretations. But they took different directions and developed different approaches with the

We also looked at the mailing list archive of the TUNES project and got the following facts about the author and the Arrow System

He claims to be "a self-taught programmer and amateur mathematician who has been using your TUNES project's documentation to help with my intense research for the last 2 years. [...] I can say, after about three years, that I have fully covered the sites and issues that your TUNES documentation and reviews mention."

This is not about Object-Orientation (OO) in the first place, but already about cybernetics, kenogrammatics, proemiality, and polycontexturality respectively subjectivity, as well as Model Theory (MT) and other fields, as it becomes obvious in later emails. But we note that he is even talking about matter related to complexity and Algorithmic Information Theory (AIT).

1st of January 1999 "more specifics: at one point of view, the system will be a big persistent heap of Self-like objects, each with hyperlinks into other objects within. [...]"

1st of January 1999 "btw.. hyperlink is just a generalized term for pointer. it's a reference that only needs the details required for completion of the reference from within the target, not by the subsystem.

[...]

do you want me to write code FOR you? the arrow language is so simple that it has already been described again and again by myself. the system is merely supposed to guarantee the consistency of the arrow system up to the point of user interface. the Vocabulary development should be relatively independent of the implementation from this respect, and Vocabulary is the chief benefit of such a system as this.

[...]

i can't describe the arrow language without thinking about how to make such a system from arrows instead of a regular computing language, so that the implementation details which i suggest are only for my thoughts about the point of total (bootstrap and OS level) reflection."

2nd of January 1999 "i've already specified the arrow language! the only thing left is vocabulary! can't you understand that? it's not some arbitrary computer programming language where the concepts are opinion-based.

in case you missed it, here is the language, everyone:

arrows are abstract objects with N slots, the "default" being 2. iteration on the default arrow type yields multi-dimensional arrow types. each slot is a reference to an arrow. all arrows are available for reference.

THAT'S IT! everything else is vocabulary which builds conceptual frameworks. if you are looking for more specifics on the definition of the arrow language, LOOK NO FURTHER!

you people really are dense.

this is the largest container for semantics ever devised! it's obviously very much bigger than you can imagine.

witness a recent statement from the discussion: the way to achieve tunes is not to add code, but to take code away from a specification."
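The definition given in the quoted email is short enough to be written down as a data structure. The following is a minimal sketch under stated assumptions: the class name, the `bind` method, and the choice to let a fresh arrow reference itself until it is bound are hypothetical and not taken from the Arrow System draft.

```python
class Arrow:
    """An abstract object with N slots (default 2); each slot references an arrow."""

    def __init__(self, n_slots=2):
        # Slots start out pointing at the arrow itself, so that every slot
        # always references some arrow. This bootstrap choice is an assumption.
        self.slots = [self] * n_slots

    def bind(self, *targets):
        """Point the slots at the given arrows, in order."""
        if len(targets) != len(self.slots):
            raise ValueError("one target per slot is required")
        if not all(isinstance(t, Arrow) for t in targets):
            raise TypeError("slots may only reference arrows")
        self.slots = list(targets)
        return self

a = Arrow()
b = Arrow()
ab = Arrow().bind(a, b)            # an arrow from a to b
about_ab = Arrow().bind(ab, a)     # arrows can reference other arrows
triple = Arrow(3).bind(a, b, ab)   # an arrow type with three slots
```

Everything beyond this structure, such as what an arrow means, would belong to what the email calls vocabulary and is not modelled here.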

In the document version 8 "A formal metaphor for specifying arrows should consist of viewing arrows as data structures with exactly two slots that are ordered". But this is the description of Günther's proemiality and polycontexturality, and together with Robinson diagram we get Günther's abstract object called kenogram and, as we explained, the proemial relationship can be drawn as 2 nodes interpreted as 0 and 1, and connected by 2 directed arrows respectively a pair of directed arrows with the one arrow going from 0 to 1 and the other arrow going from 1 to 0. The resulting graph shows at the same instant of time the simultaneous relationship of two slots, two signs, and relator 1 and relatum 0, and relator 0 and relatum 1.

24th of April 1999 "Announcement:

I posted Brian's Arrow paper draft on the TUNES web site. The link is http://www.tunes.org/papers/Arrow/ (also ftp://ftp.tunes.org/pub/tunes/papers/)

For those who don't know, the Arrow System is Brian Rice's idea of a TUNES-like system. You can post comments about it to the tunes list."

The date of the first publication of AS matches the internal date of version 8 of the document, the 24th of April 1999.

26th of April 1999 "Arrows n=m+1 example

>'n', 'm', and '1' would be arrows (selectors) from sets of atoms:

>'n' and 'm' would be part of a particular user context vocabulary

>(called an ontology), and '1' would be an arrow from the set of

>natural or integer or whatever kinds of numbers (again, in a graph).

>this looks like a good place to start discussion... any comments?

It is easy for me to imagine a '+' graph or a '=' graph because I know intuitively that the concepts behind these need to be linked (by arrows) to their arguments.

But I have a problem to see 'n', 'm' and '1' as sets of arrows. Sure these are sets of 'things' but it is strange for me to make these things arrows. It seems to me that the arrow system must come to a point where it does not reference arrows of a graph, but simply 'things' of a set of 'things'.

So it seems more intuitive to define an arrow as having two slots that can reference either an arrow or an 'atom (or object)'. I guess this must be wrong for you, since it would means to lose the homo-iconic property. But I don't see by myself yet why it would be so bad.

This example have shown to me that graphs (set of arrows) are the way semantics get bound to arrows. I now tend to see the evaluation like an actor that add an arrow to the 'evaluate to' graph. By example an actor to add natural numbers, that do its job by looking inside the '+' graph for arrows whose both slots arrows are member of the 'natural numbers', and if so, add the two numbers and build an arrow with slot 0 referencing the arrow in the '+' graph, and slot 1 with a reference to the sum of the two numbers. This newly created arrow is then added to the 'evaluate to' graph.

For me these numbers are just element of a set of objects. For you they are element of the set of 'natural numbers'. But then how does an arrow of this set became bound with the, let's say, five semantics.

In my way of defining a slot of an arrow as either referencing an arrow or an object, I simply reference to a record that say where to find the object, what size it has and there I would find the sequence of bits 00000101."
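The evaluation step described in the quoted email can be sketched as follows. The sketch follows the variant proposed in that email, where a slot may reference an atom instead of an arrow. It assumes arrows are 2-tuples, graphs are Python sets, and natural numbers are non-negative ints; all names are hypothetical.

```python
def add_actor(plus_graph, evaluates_to):
    """Extend the 'evaluate to' graph with sums for arrows in the '+' graph.

    An arrow is a 2-tuple (slot 0, slot 1). Only arrows whose two slots
    both hold natural numbers are processed; all others are left alone.
    """
    def is_natural(x):
        return isinstance(x, int) and not isinstance(x, bool) and x >= 0

    for arrow in plus_graph:
        left, right = arrow
        if is_natural(left) and is_natural(right):
            # Slot 0 references the arrow in the '+' graph, slot 1 the sum.
            evaluates_to.add((arrow, left + right))
    return evaluates_to

# ("n", 1) is skipped, because "n" is not a natural number.
plus_graph = {(2, 3), (4, 1), ("n", 1)}
evaluate_to = add_actor(plus_graph, set())

# The bit sequence mentioned in the email for the number five, as 8 bits.
five_as_bits = format(5, "08b")
```

The actor only adds arrows to the 'evaluate to' graph and never changes the '+' graph, so the semantics are attached through graph membership, as the email suggests.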

See the comment to the next quote.

27th of April 1999 "once again, READ THE DRAFT! ontological relativism is the concept of going against the "levels of abstraction" metaphor and the HIERARCHY that it implies! i thought that tunes was against hierarchies because of the ideas of cybernetics and their universality. obviously, i was terribly wrong."

Indeed, the Arrow System is based on

- Günther's Kenogrammatics, Proemial Relationship Model (PRM), and PolyContextural Logic (PCL),

- Contextual Logic (CL or ContextL), calculus of context, or formalized context, formalized contextual dependence, or context transcendence formalization, or context as formal object,

here collectively referred to as ontological relativism.

Instead of writing all the related chapters of that draft, a few references to the original works would have been sufficient.

As others and we already explained in relation to these fields, this idea of ontological relativism has a symbol grounding problem.

27th of April 1999 "> I think we should all look at Cognitive Science research (and similar

> sources) before we program our object system. I say it should be based on

> _ordinary_ human thought constructs, not mathematics or computer science.

>

that's a great idea. why don't we program everything in english, since it's so natural? oh, wait. then we'd have to make an entirely different system for russians, or germans, or the eskimos, since their idea of human thoughts might be different from ours. the idea that _ordinary_ thought processes are enough for computer systems is as ludicrous as saying that because COBOL was closer to human language than any other of the early programming languages, that it was therefore the best among them.

bottom line: it's against the ideas of utilitarianism (software re-use, etc.) to try to "force" anyone's idea of common sense representations into a computer."

Thank you very much for the clarification in relation to the field of Cognitive Computing (CogC), because the Arrow System and the TUNES OS are only about ordinary computing, specifically graph processors and Graph-Based Knowledge Bases (GBKBs). Oh, Knowledge Graph (KG).

27th of April 1999 "[...]

ok. the "shifting" of contexts was supposed to relate to the papers by John McCarthy [and Saša Buvač] called "Formalizing Context (Expanded Notes)" (i lost the URL [www-formal.stanford.edu], but i'm sure that the tunes site has it somewhere). so that related to interpreting information gained by one computation for the use of another, unrelated computation."

So this explains the whole case more.

John McCarthy: Notes on Formalizing Context. 1993.

John McCarthy and Saša Buvač: Formalizing Context (Expanded Notes). 1998 and 28th of February 2012.

27th of April 1999 "> I think we should all look at Cognitive Science research (and similar

> sources) before we program our object system. I say it should be based on

> _ordinary_ human thought constructs, not mathematics or computer science.

>

that's a great idea. why don't we program everything in english, since it's so natural? oh, wait. then we'd have to make an entirely different system for russians, or germans, or the eskimos, since their idea of human thoughts might be different from ours. the idea that _ordinary_ thought processes are enough for computer systems is as ludicrous as saying that because COBOL was closer to human language than any other of the early programming languages, that it was therefore the best among them.

bottom line: it's against the ideas of utilitarianism (software re-use, etc.) to try to "force" anyone's idea of common sense representations into a computer.

> Personally, the idea of a type/class system is pretty alien to me. My world

> consists of only 'objects'. Some 'objects' are very concrete: pen, pencil,

> keyboard, phone... Others are more vague (abstract): writing implement,

> thing, idea, Tunes :), letter, song... All these 'objects' relate to one

> another in different ways. Who needs types when we have _relations_? We

> can say "a pencil _is_ a writing implement" or "a pen _is like_ a pencil" or

> "2 _is not_ a letter". As far as I know, that's how I represent things in

> my head, and that's how other people do it too. Does anyone here do it

> differently?

>

let's assume that we don't think differently, and that we make something upon which everyone can agree. have we accomplished the tunes goals? i say no. i say that tunes should be dynamically extensible by any user in a simple way _for any purpose_. no system today even remotely approaches this quality.

> If different peoples' minds are somewhat incompatible, we should find out

> how they're the alike, and make our object system flexible enough to

> accomodate everyone. At the same time, it should accomodate computers, by

> being fairly efficient... we might have to take shortcuts.

>

wow! i never thought of _that_ before! let's hack together some programming system / OS that just works. i'll bet that no one has tried that before. (intentional sarcasm)"

28th of April 1999 "

> [ snip ]

> > ok. the "shifting" of contexts was supposed to relate to the papers by John

> > McCarthy called "Formalizing Context (Expanded Notes)" (i lost the URL, but

> > i'm sure that the tunes site has it somewhere). so that related to

> > interpreting information gained by one computation for the use of another,

> > unrelated computation.

> > the searching problem, as you state it, is another issue. i think that the

> > solution could be found by a simple idea: suppose we take some data-format

> > (a state-machine algebra) and define its information in terms of arrows. it

> > should be easy, then, to store most of the arrow system's information in

> > terms of that data structure, based on the efficiency of the encoding

> > (information density, search-and-retrieval times, etc). we could then, for

> > instance, store information in syntax trees (like LISP) and retrieve it in

> > the same way, constructing arrows for the information iteratively. this

> > could even allow us to gain new information from, say, Lisp or Scheme source

> > code.

>

> I'm not sure I get it. You want to create a state-machine that, the same

> way one can check the presence of e.g. a string, check the "presence" of

> a valid system state? It sounds too simple, but it's an interesting

> idea! Are you sure you wouldn't need to build a new machine for every

> request?

>

actually, i'm sort of _interested_ in building a new machine at every request, but also in having the system be optimized for doing so. with arrows, their graphs can be used to build state-machines, possibly infinite in size (which means that abstract language models can be built). of course, the idea of having state-machines dynamically instantiated on request begs that we use partial evaluation and persistence to create these earlier, such as when we define the data-format to the system. then the question is where to place this information and how to re-use it effectively.

> > > Now, where I'm getting at is: What kind of computational model do you propose? ( that is: how will the system in practice process requests like "what is n?" ?) It would be interesting, in the context of arrows beeing a very general form of data organisation, to see what complexity it would have, and what compromizes ( if any ) and restrictions ( if any ) it would have.

> > >

> > in this case, i would generally propose lambda-calculus, which is acheived quite readily by the arrow system when you make category diagrams. category diagrams are just arrow graphs where all arrows compose sequentially. the arrows represent lambdas, and the nodes represent expression types. otherwise, i believe that ontologies and algebras could help to define any execution scheme that a person could imagine, even complicated ones.

>

> If I understand correctly, then arrows in their raw form must be

> interpreted in some context. E.g. when you say above that

> lambda-calculus can be achieved by regarding the arrows as those in a

> category diagram. Other examples are object diagrams to model the state

> of a system ( how the actual instances of things are connected together

> ), and state diagrams to model the transitions between different states

> in the system.

>

> I think this is where coloring of arrows, as June Kerby talks about,

> comes in. In that scheme, you would say that a category diagram style

> arrow is one color and a state transition style arrow is another. In the

> general case, you would need one mother of a pallette. I guess you

> wouldn't want coloring in your model, but still the problem of "what

> does the arrow connecting these two enteties mean?" must be addressed in

> some way.

> There is however an alternative to coloring, that better fits the style

> of your model, and that is contexts, saying that the color of the arrow

> is dependent on the situation.

>

yes, and if you replace "color" with "meaning", then you'll find a somewhat complete answer in the arrow draft. (sections 2.2.2, 4.6, and 4.7, i believe)

> But there are some problems with this that you might not want. First of

> all the "situation" is dependent on the evaluation of something and then

> you need context switches - but these context switches, beeing all

> arrows, need some meta context switch in order to apply the proper

> interpretation. And there will be _alot_ of context switching.

>

the arrow context switch could be contained by a single graph, or most likely a multitude of graph structures in order to provide a framework for reasoning.

> The second problem ( I personally see this as a property and not a

> problem, but I know Brian is against hierchies ) is that contexts,

> always working on homoiconic arrows ( no coloring ), eventually end up

> forming a hierchy. I.e. one context, formed by the area of switch-on to

> switch-off, _must_ be contained fully inside or fully outside any other

> context.

> Imagine contexts 'S', for "legal state transition", and 'C', for "is in

> the same category as". I'll denote a context with labled parenteses,

> i.e. '(C' and 'C)'. Small letters are things to be interpreted. Now, if

> I say:

> (C a (S b C) c S)

>

> Then, given that the system is homoiconic, there is no-way to give any

> meaning to 'b'. Choosing to view 'b' as talking about state transition,

> gives you a 50% chance of failure - it's ambiguous.

>

yes, but then you're proposing the "push"/"pop" model anyway, which suggests a stack immediately. if you view it another way, then context-shift designators "(C" and "C)" enclose an ordered pair "(a,b)", which an arrow could represent. likewise, the state-transition designators "(S" and "S)" do the same for "(b,c)". by placing these graphs under the appropriate deterministic logic, meaning _can_ be derived, but it is really two completely separate meanings instead of a necessarily singular meaning. now, those two meanings _might_ be contradictory, but that would depend on the ontology: on how you decided to interpret what C and S meant in a given context. of course, if "b" is an atom in a context, then the interpretations of C and S should not be at the same order of abstraction in order to avoid the ambiguity.

also, i'd like to relativize any concept, such as S="is a legal state transition". i'd like to place that in an environment (like Tunes) where generalizations can be readily made in a semantically clean way. my idea is that perhaps what passes for legal state transitions in one system means something completely different to another system or context. perhaps one acts as the machinery for the other, so that state transitions become parts of operators. or maybe the ontologies completely crosscut each other, so that it's hard to express verbally what the difference is.

> If, on the other hand the system was not homoiconic, i.e. two different

> types of arrows, then one can imagine that 'C' effected another type of

> arrow then 'S'. That could work, but there would be inpureness or

> sideeffects, and code would need to be veryfied at a level prior to

> reflection - and you don't want that.

>

> Conclusion ( please flame me if I'm wrong ) : In a homoiconic system,

> the need for interpreting information dependent on context, enforces

> some hierchical property on the system.

>

i don't agree, of course. just take a look at the draft. you'll see that it describes contexts as identifying agents (i mean all the aspects of agents that you would want to apply) with structures of ongologies [ontologies] called "ontology frames". the frames are basically collections of nodes in an ontology graph, overlaid by a structure that i haven't looked into yet. the idea is that a context has a boundary, and that interpeting information from the outside of it requires some translation process from an exterior ontology to one of its own ontologies (represented by an arrow). within the context, perhaps the translations should be computable and completely defined, but that seems unnecessarily strict, since they should / could be used to build those transitions.

the big idea, i think, is the use of a graph of ontologies with information interpretations between the ontologies as nodes. this graph will most certainly contain higher-order infinities of nodes as well as translations, but should be managable. the intention was to get around the strange properties of set theory, but the applications may be much wider in scope (i'm guessing)."

At this point one can see that ontology seems to be understood as a synonym for context and information filter. See also the email of the 3rd of May 1999 for the further discussion of the terms context, agent, ontology frame, and ontology graph. See also the related comments below.
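The bracket example from the quoted email, `(C a (S b C) c S)`, can be checked mechanically. The following is only a minimal sketch of the two readings discussed there: the push/pop (stack) reading, under which the brackets do not nest, and the ordered-pair reading, under which each context simply encloses a pair of symbols. The function names `open_contexts` and `spans` are hypothetical and do not come from the arrow draft or the TUNES project.

```python
def open_contexts(tokens):
    """Return ({symbol: contexts open at that symbol}, properly_nested)."""
    stack = []              # contexts in order of opening (push/pop reading)
    properly_nested = True
    seen = {}
    for tok in tokens:
        if tok.startswith("("):        # context switch-on, e.g. "(C"
            stack.append(tok[1:])
        elif tok.endswith(")"):        # context switch-off, e.g. "C)"
            name = tok[:-1]
            if stack and stack[-1] == name:
                stack.pop()
            else:
                # Closing a context that is not innermost: not a hierarchy.
                properly_nested = False
                if name in stack:
                    stack.remove(name)
        else:                          # a symbol to be interpreted
            seen[tok] = set(stack)
    return seen, properly_nested


def spans(seen):
    """Ordered-pair reading: list the symbols each context encloses."""
    result = {}
    for symbol, contexts in seen.items():
        for ctx in sorted(contexts):
            result.setdefault(ctx, []).append(symbol)
    return result


tokens = "(C a (S b C) c S)".split()
seen, nested = open_contexts(tokens)
pairs = spans(seen)
```

Under the stack reading the check reports that the contexts cross, and `b` falls under both `C` and `S`. Under the pair reading the same input yields one pair per context, which corresponds to the "two completely separate meanings" mentioned in the reply.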

We also note that no aspects of agents are specified other than those related to general properties of the fields of Information System (IS) and Knowledge-Based System (KBS).
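To make the quoted idea of a "graph of ontologies" with translations between them more concrete, here is a minimal sketch. It assumes that each ontology is just a named node and that each translation is an ordinary function attached to a directed arrow; the class `OntologyGraph`, its methods, and the toy ontologies are hypothetical illustrations, not the structure described in the arrow draft.

```python
from collections import deque


class OntologyGraph:
    """Directed graph: nodes are ontology names, arrows are translations."""

    def __init__(self):
        self.arrows = {}  # source name -> {target name: translation function}

    def add_translation(self, source, target, translate):
        self.arrows.setdefault(source, {})[target] = translate
        self.arrows.setdefault(target, {})

    def interpret(self, value, source, target):
        """Carry a value across a context boundary along the shortest
        chain of translations (breadth-first search)."""
        queue = deque([(source, value)])
        visited = {source}
        while queue:
            node, current = queue.popleft()
            if node == target:
                return current
            for nxt, translate in self.arrows.get(node, {}).items():
                if nxt not in visited:
                    visited.add(nxt)
                    queue.append((nxt, translate(current)))
        raise LookupError(f"no translation from {source!r} to {target!r}")


# Toy ontologies (assumed for illustration only).
abstractions = {"pen": "writing implement", "pencil": "writing implement"}

graph = OntologyGraph()
graph.add_translation("exterior", "objects", lambda term: term.lower())
graph.add_translation("objects", "abstractions",
                      lambda term: abstractions.get(term, "thing"))

result = graph.interpret("Pencil", "exterior", "abstractions")
```

In this sketch, interpreting information from outside a context amounts to finding a path of translation arrows into one of the context's own ontologies; when no such path exists, the lookup fails instead of guessing a meaning.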

29th of April 1999 "On Wed, Apr 28, 1999 [...] Rice Brian [...] wrote:

[blah blah... petty insults deleted]

[...]

> mumbo jumbo? hofstadter? you think reading hofstadter will help you

> understand the discussion? can't you think for yourself, instead of

> disagreeing with everything that you hear? can't you give me a chance?

> can't you even look at the references on the Tunes review pages?

Yeah, even Hofstadter is slightly more understandable than your arrow paper, I'm sorry to say. If you've got something there, only a really bright person with an extensive CS background would recognize it, the way you've written it. Likewise, it would take such a person to recognize your paper as mumbo-jumbo, if that's what it is. If you want *my* support, get out of this mindset "If you can't understand my great scientific work, you must be a moron" and write something I can read without losing consciousness! Just tell me the gist of it, and let me use my imagination. You've got to convince me that reading your paper isn't a complete waste of time."

What should we say? :D Read the whole Clarification and related notes, explanations, clarifications, and investigations.

We do apologize for being boring with our mumbo jumbo, but we must go through this blah blah blah and high tech stuff at the edge of the imaginable to get to the ground and then climb up again.

4th of June 1999 "this is an old post by some months, but i thought that i should comment.

> This comes from the new preface to the 20 anniversary Edition of

> Godel, Escher, Bach. It made me think of Tunes and all our continued

> discussion of reflection as a primary mechanism.

>

> ...one thing has to be said straight off: the Godelian strange loop

> that arises in formal systems in mathematics (i.e., collections of

> rules for churning out an endless series of mathematical truths solely

> by mechanical symbol-shunting without any regard to meanings or ideas

> hidden in the shapes being manipulated) is a loop that allows such a

> system to "percieve itself", to talk about itself, to be "self-aware",

> and in a sense it would not be going to far to say that by virtue of

> having suh a loop, a formal system _acquires a self_.

>

> - Douglas Hofstadter

>

while i'm not sure from this statement what exactly the "strange loop" is, i do know what the statement identifies: a mathematical model of a theory that extends below the level of logic. in other words, it's not just an inference system, it's the logical activity beneath: the activity of, say, the processing machine involved. otherwise, the logical structure would confer some small meaning on the shapes generated (as is true of a Robinson diagram or positive diagram in model theory).